AI Black Box Explained by AI Scientist

An AI black box is an artificial intelligence system whose internal decision-making process is not visible or understandable to the user.

In science, computing, and engineering, a black box is a system that can be observed only through its inputs and outputs (what goes in and what comes out), without any knowledge of how it works internally.

The name “black box” comes from the way its inner workings are hidden from the user. A black box can be almost anything: a device, a machine, a program, a brain, or an organization.

But we’re here to discuss the black box in the context of artificial intelligence and the black box problem.

What are AI Black Boxes?

An AI black box is an artificially intelligent system that makes decisions without explaining how it reached them; only the input and output are visible. For example, AI chatbots like ChatGPT and Bard generate content without showing the code or logic behind the output.

AI image generators like DALL·E 2 and Midjourney also fall under the umbrella of AI black boxes, since they do not reveal how an image is created.

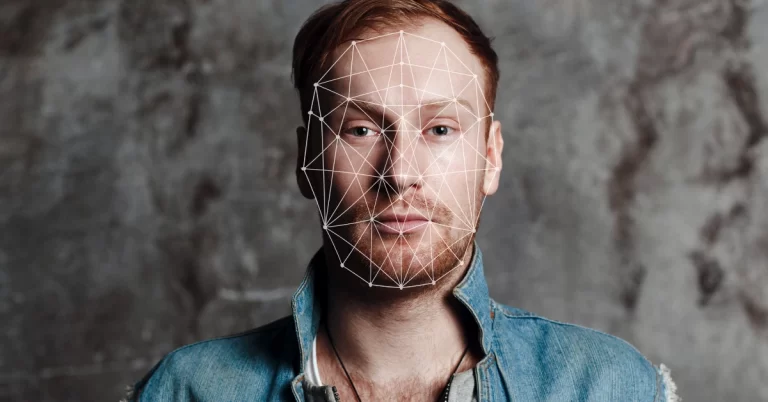

So basically, this covers much of machine learning, and not just bots: self-driving cars and facial recognition systems also fall within the realm of the infamous black box.

How Does Black Box Machine Learning Work?

Black box machine learning starts from the premise that one cannot fully understand how the model functions internally, even when it performs well.

This is most commonly seen in deep learning algorithms, which stack numerous computational layers. Here’s a step-by-step breakdown of how black box machine learning typically works:

- Data Feeding and Pattern Recognition: To begin with, complex algorithms study vast data sets for trends.

The algorithm is fed many examples and trains itself through iterative experimentation (repeatedly testing and adjusting to improve results). With this input, the model fine-tunes its internal parameter values until it can give accurate predictions for new inputs.

- Training Phase: Once it has gone through this first stage of learning and adapting, the model can process real-world data and make predictions or decisions.

For instance, in fraud detection, the model may compute a risk score from the patterns it has learned.

- Scaling with New Data: As the model receives more input data, it progressively refines its strategies and techniques.

This continual adjustment improves the reliability of the model’s outputs for the decisions they support.

- Opacity of Internal Mechanisms: In a nutshell, a black box AI chains together so many calculations that no one can trace the specific calculations or pathways that produced the final result.

As a result, at times even its creators cannot explain the reason behind a particular result.

- Complex Networks of Artificial Neurons: In black box models, deep networks spread data and decision-making across thousands of artificial neurons.

The resulting dispersion is comparable in complexity to human thought processes, making it difficult to identify which inner components caused any given output.
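To make the steps above a little more concrete, here is a minimal sketch of a tiny neural network trained by repeated adjustment. Everything in it is an assumption chosen for illustration: the XOR toy data, the network size, the learning rate, and the function names are not taken from any real system. It only shows the general shape of the process: parameters start random, get nudged after every example, and end up as numbers that predict well but explain nothing on their own.

```python
import math
import random

# Toy data (an assumption for illustration): the XOR pattern.
XOR_DATA = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 0)]

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def init_params(n_in, n_hidden, seed=0):
    # Internal parameters start out as random numbers.
    rng = random.Random(seed)
    return {
        "w_hidden": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b_hidden": [0.0] * n_hidden,
        "w_out": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b_out": 0.0,
    }

def forward(params, x):
    # Input -> hidden layer (tanh) -> single output probability.
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(params["w_hidden"], params["b_hidden"])]
    z = sum(w * h for w, h in zip(params["w_out"], hidden)) + params["b_out"]
    return hidden, sigmoid(z)

def mean_loss(params, data):
    # Average cross-entropy: lower means better predictions.
    total = 0.0
    for x, y in data:
        _, p = forward(params, x)
        p = min(max(p, 1e-12), 1.0 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(data)

def train(params, data, epochs=3000, lr=0.2):
    # Iterative experimentation: predict, measure the error, adjust, repeat.
    for _ in range(epochs):
        for x, y in data:
            hidden, p = forward(params, x)
            d_out = p - y  # error signal at the output
            for j, h in enumerate(hidden):
                d_hidden = d_out * params["w_out"][j] * (1.0 - h * h)
                params["w_out"][j] -= lr * d_out * h
                params["b_hidden"][j] -= lr * d_hidden
                for i, xi in enumerate(x):
                    params["w_hidden"][j][i] -= lr * d_hidden * xi
            params["b_out"] -= lr * d_out
    return params

if __name__ == "__main__":
    params = init_params(n_in=2, n_hidden=4)
    print("loss before training:", mean_loss(params, XOR_DATA))
    train(params, XOR_DATA)
    print("loss after training: ", mean_loss(params, XOR_DATA))
    # The learned "knowledge" is just a grid of numbers with no obvious meaning.
    print("hidden weights:", params["w_hidden"])
```

Even at this scale, the printed weights do not read as rules. A production model has millions or billions of such values, which is where the opacity comes from.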

Why are AI Black Box Models Used?

AI black boxes are mainly used because they offer higher predictive accuracy than more interpretable models and can handle complex data.

These models can become so complex that even those who designed them are unable to understand the process a computer vision or natural language model goes through, so it’s no surprise that fixing a black box that malfunctions can be a complicated task.

You could say that the patterns these algorithms pick up from data are hard to pin down, and that this is a problem that could get anyone canceled…

We’ll talk about that soon, but all you need to know for now is that the use of black boxes is still a contested practice in the artificial intelligence field.

When Should Black Box AI be Used?

Black box AI is well suited to applications that depend on its ability to handle complex tasks and provide accurate predictions. Typical fields and strengths include:

1. Financial Sector: Organizations use black box AI for approving loans and identifying fraudulent transactions.

2. Healthcare: In diagnostics, drug discovery, and personalized treatment plans, black box AI can analyze complex medical data.

3. Pattern Recognition: It excels in recognizing complex patterns in large datasets, tasks that are challenging for human analysts. This capability is particularly useful in industries dealing with vast amounts of data.

4. Efficiency: Black box AI is known for its efficiency in processing and analyzing data, making it a valuable tool in various sectors.

Despite these strengths, the opacity of black-box AI systems also presents challenges in understanding and explaining their decision-making processes.

Efforts to clarify black box AI focus on creating explainable methodologies that are understandable at a human cognitive scale, aiming to outline the factors and logic behind AI decisions.

This balance between efficiency and transparency is crucial in determining the appropriate use cases for black box AI.

The Black Box Problem: The Challenges of Black Box AI No One Addresses

Jacky Alciné, a software engineer, pointed out a serious failure in the image recognition algorithm of Google Photos, which had classified his Black friends as “gorillas”.

Google said it was “appalled and genuinely sorry”, apologized to Alciné, and promised to fix the problem, but it has not fixed the underlying issue. Instead, it blocked the image recognition system from identifying gorillas at all, preferring to limit the feature rather than risk another backlash.

This goes to show that even one of the largest commercial organizations wasn’t able to root out the underlying cause of the misclassification made by its image recognition system. Now why are we talking about this?

Though black box models are effective, especially deep neural networks trained on large datasets with many parameters, they can produce harmful outcomes and erode users’ trust, which limits their use in fields such as medical treatment, loan approvals, or job interviews.

How these black box AI models come to a conclusion is a big question mark, and their predictions are opaque enough to seem unreasonable.

This can cause serious bias and accountability issues: when it is hard to identify why a biased output was produced, correcting and improving the model becomes an almost impossible task.
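Even when the inside of a model cannot be inspected, its outputs can still be audited. The sketch below is one simple way to do that, not a complete fairness methodology: it compares approval rates across groups using only inputs and outputs. The `opaque_loan_model` function, the applicant fields, and all the numbers are hypothetical stand-ins invented for this example.

```python
from collections import defaultdict

def approval_rates(model, applicants, group_key):
    """Approval rate per group, using only the model's inputs and outputs."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for applicant in applicants:
        group = applicant[group_key]
        total[group] += 1
        if model(applicant):
            approved[group] += 1
    return {group: approved[group] / total[group] for group in total}

def disparity_ratio(rates):
    """Lowest approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

def opaque_loan_model(applicant):
    # Hypothetical stand-in for a black box. In a real audit this body would
    # be hidden from us; here it quietly favours odd-numbered postcodes.
    score = 0.6 * applicant["income"] / 100_000 + 0.4 * (applicant["postcode"] % 2)
    return score >= 0.5

# Synthetic applicants (made up for illustration). The "group" field is never
# passed to the scoring formula, but it is correlated with the postcode.
APPLICANTS = [
    {"group": "A", "income": 60_000, "postcode": 1001},
    {"group": "A", "income": 40_000, "postcode": 1001},
    {"group": "A", "income": 30_000, "postcode": 1003},
    {"group": "A", "income": 90_000, "postcode": 1002},
    {"group": "B", "income": 60_000, "postcode": 2002},
    {"group": "B", "income": 40_000, "postcode": 2002},
    {"group": "B", "income": 30_000, "postcode": 2004},
    {"group": "B", "income": 90_000, "postcode": 2001},
]

if __name__ == "__main__":
    rates = approval_rates(opaque_loan_model, APPLICANTS, "group")
    print("approval rate per group:", rates)
    print("disparity ratio:", disparity_ratio(rates))
```

An audit like this can show that outcomes differ between groups, but it cannot say why. That gap is exactly the accountability problem described above.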

Black Box vs White Box Testing

Both methods are essential for software testing, and the choice between them depends on the testing objectives, the testing stage, and the available resources.

First and foremost, unlike black box testing, which treats the internal workings as hidden, white box testing gives the tester insight into the inner workings of the software and allows testing of code snippets, algorithms, and other internals.

While black box testing is done to ensure the functionality of the software meets the criteria and specifications, white box testing is conducted to ensure the internal code is correct and works efficiently.

That being said, black box testing requires barely any knowledge of the software’s internals, but white box testing... well, it requires a good deal more than that, from programming languages and software architecture to design patterns.

Another significant difference is the methods. Black box testing uses equivalence partitioning, boundary value analysis, and error guessing to create test cases, whereas white box testing uses control flow testing, data flow testing, and statement coverage.
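As a rough illustration of the black box techniques named above, the sketch below derives test cases purely from a written specification, without reading the implementation. The `ticket_price` function and its pricing rules (ages 0 to 12 pay 5, 13 to 64 pay 10, 65 and over pay 7, negative ages are rejected) are hypothetical and exist only for this example.

```python
def ticket_price(age):
    """Hypothetical function under test; a black box tester sees only its spec."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age <= 12:
        return 5
    if age <= 64:
        return 10
    return 7

# Equivalence partitioning: one representative value per input class.
PARTITION_CASES = [(6, 5), (30, 10), (80, 7)]

# Boundary value analysis: values sitting on the edges of each class.
BOUNDARY_CASES = [(0, 5), (12, 5), (13, 10), (64, 10), (65, 7)]

def run_black_box_tests(func):
    """Return a list of (input, expected, actual) for every failing case."""
    failures = []
    for age, expected in PARTITION_CASES + BOUNDARY_CASES:
        actual = func(age)
        if actual != expected:
            failures.append((age, expected, actual))
    # Error guessing: an invalid input that the spec says must be rejected.
    try:
        func(-1)
        failures.append((-1, "ValueError", "no error"))
    except ValueError:
        pass
    return failures

if __name__ == "__main__":
    print("failures:", run_black_box_tests(ticket_price))
```

A white box tester would go further and check that these cases execute every statement and branch in `ticket_price`, which requires reading the code itself.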

It’s evident that the two methods work differently. White box testing is relatively more effective at detecting internal defects and supporting maintenance.

It does, however, take more time than black box testing, which is easy to use and requires no programming knowledge to detect functional issues.

Solutions to the Black Box Problem

To address the opacity and inaccuracy of black boxes, researchers are developing approaches such as Explainable AI (XAI) and Hybrid AI that are easier to interpret and less prone to bias or unforeseen behavior.

- Explainable AI (XAI): These models are designed to be more transparent and interpretable, especially when handling complex decisions.

They offer clearer insight into their reasoning than black box models by using techniques that generate explanations for their decisions.

- Hybrid AI: Hybrid AI combines black box and white box models to create an effective, transparent, and interpretable system that offsets the flaws of each.

Positioned between the accuracy of the black box and the transparency of the white box, hybrid AI aims to avoid the weaknesses of both.
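One widely used explanation technique from the XAI toolbox is permutation importance: shuffle one input feature at a time and see how much the model's accuracy drops. The sketch below is a minimal pure-Python version of that idea, written under assumptions. The `opaque_model` function, the transaction features (amount, hour, customer id), and the data are all hypothetical, and real projects would normally rely on an established library rather than this hand-rolled loop.

```python
import random

def accuracy(predict, rows, labels):
    correct = sum(1 for row, label in zip(rows, labels) if predict(row) == label)
    return correct / len(rows)

def permutation_importance(predict, rows, labels, n_repeats=20, seed=0):
    """Mean accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in rows]
            rng.shuffle(shuffled)
            permuted = [row[:col] + [value] + row[col + 1:]
                        for row, value in zip(rows, shuffled)]
            drops.append(baseline - accuracy(predict, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

def opaque_model(row):
    # Hypothetical black box: flags a transaction as suspicious (1) or not (0).
    # It uses the amount and the hour, and ignores the customer id.
    amount, hour, _customer_id = row
    return 1 if amount > 500 or hour < 6 else 0

# Synthetic transactions (made up): [amount, hour of day, customer id].
ROWS = [
    [900, 14, 101], [120, 3, 102], [650, 23, 103], [80, 11, 104],
    [700, 2, 105], [40, 16, 106], [300, 4, 107], [200, 20, 108],
]
# We explain the model itself, so its own predictions serve as the labels.
LABELS = [opaque_model(row) for row in ROWS]

if __name__ == "__main__":
    scores = permutation_importance(opaque_model, ROWS, LABELS)
    for name, score in zip(["amount", "hour", "customer_id"], scores):
        print(f"{name}: {score:.3f}")
```

The scores say which inputs the model depends on, not how it combines them, so this is a partial explanation rather than full transparency.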

What’s Next?

University of Michigan-Dearborn Associate Professor Samir Rawashdeh said:

“Artificial intelligence can do amazing things that humans can’t, but in many cases, we have no idea how AI systems make their decisions.”

The black box problem keeps resurfacing, especially now at the height of the AI revolution, because of how hazy and incomprehensible these systems are.

This may have sparked controversy and undermined the trust needed to take the technology forward.

Explainable AI, Hybrid AI, and Human-in-the-loop (HITL), which involves human feedback and oversight in the AI decision process, could serve as solutions.
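Human-in-the-loop can take many forms. One common pattern, sketched here under assumptions, is to accept the model's answer only when it is confident and hand everything else to a person. The `Decision` class, the `decide` function, the 0.9 threshold, and the reviewer callback are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Decision:
    label: str
    confidence: float
    decided_by: str  # "model" or "human"

def decide(model_label: str, confidence: float,
           human_review: Callable[[str], str], threshold: float = 0.9) -> Decision:
    """Keep the model's answer when it is confident; otherwise ask a human."""
    if confidence >= threshold:
        return Decision(model_label, confidence, "model")
    # Low confidence: the human sees the model's suggestion and has the final say.
    return Decision(human_review(model_label), confidence, "human")

if __name__ == "__main__":
    # Hypothetical reviewer who overrides uncertain approvals.
    reviewer = lambda suggestion: "reject" if suggestion == "approve" else suggestion
    print(decide("approve", 0.97, reviewer))
    print(decide("approve", 0.62, reviewer))
```

The threshold is the main design choice: a higher value sends more cases to people, which costs time but adds oversight.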

That said, these solutions are still limited in terms of scalability, complexity, accuracy, and bias, so the future is still… a little bleak. What’s clear is that this could become either a successful leap or a grim failure.