What is Generative AI and How Does it Work?

Generative artificial intelligence (AI) is a category of AI algorithms, typically machine learning models, that can create new content based on the data they have been trained on.

One common approach is a type of deep learning called generative adversarial networks (GANs), which consist of two competing neural networks: a generator and a discriminator.

Generative AI has a wide range of applications, such as creating images, text, audio, video, software code, and product designs.

For example, generative AI can be used to create realistic faces of people who do not exist, generate captions for images, synthesize speech from text, or design new drugs. Generative AI can also help with data augmentation, privacy preservation, and content creation.

Does anything seem off about the paragraphs above? Probably not: the grammar, punctuation, sentence structure, and word choice are all polished. That’s because they were written by ChatGPT, a generative AI chatbot.

Some industry forecasts predict that by 2025, generative AI will produce 10% of all data generated globally. As unsettling as that sounds, it is worth understanding this exciting yet daunting area of AI’s future.

How Does Generative AI Work?

One widely used generative technique is a type of deep learning called generative adversarial networks (GANs), which consist of two neural networks. (Other approaches, such as variational autoencoders and transformers, are also common.)

- The generator: produces realistic outputs to fool the discriminator

- The discriminator: distinguishes between real and fake (generated) outputs

Through this competition, the generator keeps improving its outputs while the discriminator becomes more accurate at telling real from fake.

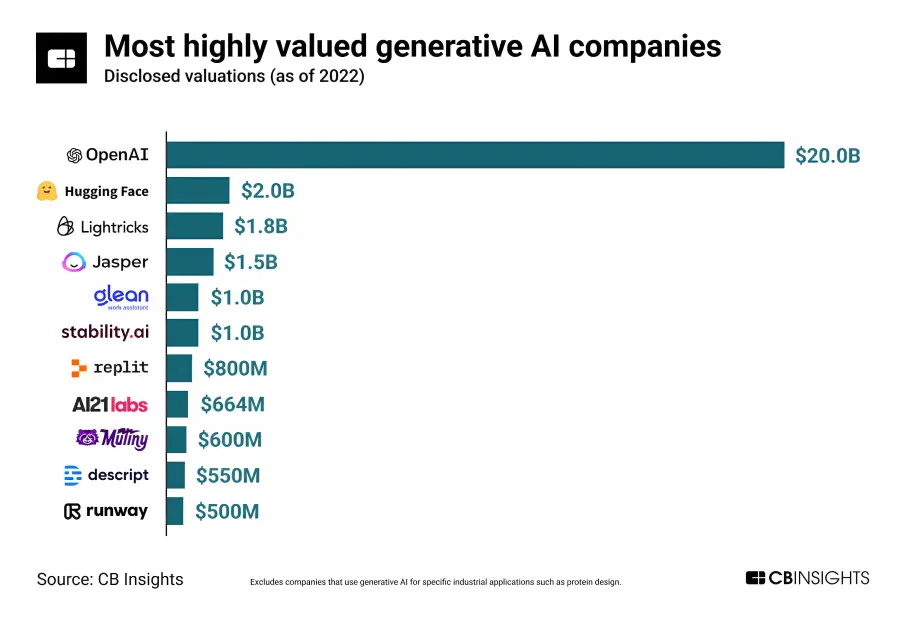
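To make the generator/discriminator loop concrete, here is a deliberately tiny sketch in plain Python; it is a toy, not how production GANs are built. It assumes one-dimensional "real" data drawn from a normal distribution with mean 3 (`real_mean`), a generator that only learns a shift (`G(z) = z + theta`), a logistic-regression discriminator, and an L2 penalty (`weight_decay`) on the discriminator that is added here as an assumption to damp the oscillation that unregularized GAN training is prone to. All names and hyperparameters are illustrative.

```python
import math
import random


def sigmoid(a: float) -> float:
    """Numerically stable logistic function."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 3.0, steps: int = 3000, batch: int = 64,
                  lr: float = 0.05, weight_decay: float = 1.0, seed: int = 0) -> float:
    """Train a 1-D toy GAN and return the generator's learned shift theta."""
    rng = random.Random(seed)
    theta = 0.0      # Generator: G(z) = z + theta, with z ~ N(0, 1).
    w, c = 0.0, 0.0  # Discriminator: D(x) = sigmoid(w * x + c).

    for _ in range(steps):
        # Discriminator step: minimize -log D(real) - log(1 - D(fake)),
        # plus an L2 penalty (an added assumption) that damps oscillation.
        grad_w, grad_c = weight_decay * w, weight_decay * c
        for _ in range(batch):
            x_real = rng.gauss(real_mean, 1.0)
            x_fake = rng.gauss(0.0, 1.0) + theta
            d_real = sigmoid(w * x_real + c)
            d_fake = sigmoid(w * x_fake + c)
            grad_w += (-(1.0 - d_real) * x_real + d_fake * x_fake) / batch
            grad_c += (-(1.0 - d_real) + d_fake) / batch
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step (non-saturating loss): minimize -log D(G(z)),
        # i.e. move fake samples toward where the discriminator says "real".
        grad_theta = 0.0
        for _ in range(batch):
            x_fake = rng.gauss(0.0, 1.0) + theta
            d_fake = sigmoid(w * x_fake + c)
            grad_theta += -(1.0 - d_fake) * w / batch
        theta -= lr * grad_theta

    return theta


if __name__ == "__main__":
    print(f"learned shift: {train_toy_gan():.2f} (real data mean: 3.0)")
```

If training behaves as intended, `theta` ends up close to 3: the generator's samples drift toward the real data because that is the only way to keep fooling the discriminator. Real GANs replace both linear models with deep networks trained by automatic differentiation, but they keep the same alternating discriminator and generator updates.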

While many companies are now taking a leap with generative AI, OpenAI was among the first to deliver widely used generative AI products, although its GPT and DALL-E models are built on transformers rather than GANs. Generative AI can be used to create realistic faces of people who don’t exist, write image captions, synthesize speech from text, and visualize ideas as images.

Generative AI History

1. Early Beginnings (1930s-1950s):

Although generative AI has surged in popularity only recently, its roots trace back to the 1930s, a period that laid the conceptual foundations of AI but saw no major commercial progress until much later.

The term “machine learning” was coined in 1959 by Arthur Samuel, who had developed a self-learning program for playing checkers.

Around the same time, Frank Rosenblatt introduced the perceptron, which he described as “the first machine which is capable of having an original idea.”

The academic discipline of artificial intelligence was formally established at a workshop at Dartmouth College in 1956 that sparked philosophical and ethical discussions about AI and its implications.

2. Exploratory Phase (1960s-1970s):

The exploratory phase began in the 1960s, when Joseph Weizenbaum created ELIZA, one of the earliest chatbots and an early precursor of generative AI.

By the early 1970s, artists such as Harold Cohen had begun using AI to make art. Cohen created a program called AARON, a groundbreaking fusion of technology and creativity.

3. Technological Advancements (2010s):

In 2014, the development of the variational autoencoder and the generative adversarial network marked a turning point. These were among the first practical deep neural networks capable of learning generative models of complex data, such as entire images.

The introduction of the Transformer network in 2017 and the subsequent development of GPT-1 and GPT-2 in 2018 and 2019, respectively, revolutionized generative models. GPT-2, in particular, showed that a model trained without supervision could generalize to a variety of tasks.

4. Recent Milestones (2020s):

The release of DALL-E, a transformer-based pixel generative model, in 2021 was a significant milestone. Along with others like Midjourney and Stable Diffusion, it marked the emergence of high-quality AI art generated from natural language prompts.

In 2023, GPT-4 was released, with some considering it an early version of artificial general intelligence.

However, this view is not universally accepted, with some scholars arguing that generative AI is still far from achieving general human intelligence.

This history underscores the multifaceted development of generative AI, from early theoretical concepts to modern applications in various industries. The field has evolved significantly, driven by both technological advancements and creative applications.

Generative AI Use Cases

- Customer Experience: Enhances service with chatbots and virtual assistants, improving engagement.

- Employee Productivity: Aids in information retrieval and automates report generation.

- Creativity & Content: Creates engaging marketing content and optimizes product design.

- Process Optimization: Automates document processing and data extraction for efficiency.

- Healthcare: Assists in medical diagnostics and personalizes treatment plans.

- Life Sciences: Accelerates drug discovery and optimizes clinical trials.

- Financial Services: Manages investment portfolios and automates financial documentation.

- Manufacturing: Enhances product design and operational efficiency.

- Retail: Optimizes store layouts and enables virtual product experiences.

- Media & Entertainment: Produces content at scale and personalizes user experiences.

- Additional Creative Applications: Generates news, images, videos, music, and synthetic data.

Benefits of Generative AI

- Automates repetitive, time-consuming tasks, reducing the time and effort required to complete them.

- Provides tools for creating new and innovative designs, content, and ideas that might not be possible through traditional methods.

- Can generate personalized content, products, and services tailored to individual preferences and needs, enhancing user experience.

- Helps in cutting down operational costs by automating tasks and processes that would otherwise require significant human labor.

- Creates synthetic data, which is particularly useful for training machine learning models where real data is scarce, sensitive, or imbalanced.

- Can analyze large datasets to identify patterns and insights, aiding in more informed and strategic decision-making.

- Enables businesses to scale their operations, particularly in content creation, without a proportional increase in resources or costs.

- Helps in maintaining high quality and consistency, especially in content generation and design tasks.

- In fields like product design and development, it allows for quick generation of multiple prototypes, speeding up the innovation process.

- Assists in drug discovery, personalized medicine, and medical diagnostics, contributing to advancements in healthcare.

- Enhances customer engagement through personalized experiences and efficient customer service solutions like chatbots.

- In sectors like finance, it helps in modeling different scenarios, aiding in risk assessment and mitigation strategies.

Limitations of Generative AI Models

Evaluating Generative AI Models

One way to evaluate generative AI models is to manually inspect generated outputs and compare them with real data, checking each output’s accuracy, coherence, and relevance and identifying any artifacts or errors.

However, manual evaluation is subjective, time-consuming, and does not scale to large, complex datasets and models.

Another way is to use qualitative methods such as user surveys, interviews, or feedback, which help reveal user satisfaction, preferences, and perceptions of the generated outputs. These methods show what users need and expect; however, they are also subjective, prone to bias, and dependent on context.

A third way to evaluate generative AI models is to use quantitative methods, such as metrics or scores that measure how similar or different generated outputs are compared with real data. Some of them are:

- Inception Score (IS): This metric feeds generated images to a pre-trained image classifier (Inception v3) and compares each image’s predicted label distribution with the average label distribution over all generated images. A high IS suggests the images are both realistic (each is classified confidently, so its conditional label distribution p(y|x) has low entropy) and diverse (predictions are spread across many classes, so the marginal label distribution p(y) has high entropy).

- Fréchet Inception Distance (FID): This metric uses a pre-trained classifier (Inception v3) to extract features from both real and generated images, fits a Gaussian to each set of features, and measures the distance between the two distributions. A low FID indicates that the generated images are both realistic and diverse, because their feature statistics (mean and covariance) closely match those of real images.

- BLEU Score: This metric measures the similarity between generated text and one or more reference texts by counting matching n-grams, where an n-gram is a contiguous sequence of n items (characters, words, or subwords, depending on the context).

BLEU combines the clipped n-gram precisions of the generated text (typically for n = 1 to 4) with a brevity penalty that penalizes outputs shorter than the reference, so a high BLEU score means the generated text closely matches the wording of the reference.
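The BLEU computation described above fits in a few lines of Python. This is a simplified, sentence-level sketch written for illustration, assuming whitespace tokenization, a single reference, uniform weights over n = 1 to 4, and no smoothing; established tools such as NLTK’s `sentence_bleu` or sacreBLEU handle multiple references, tokenization, and smoothing more carefully. The function name `simple_bleu` is hypothetical.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of the contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(candidate, reference, max_n=4):
    """Geometric mean of clipped n-gram precisions, times a brevity penalty."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        # Clip each candidate n-gram count by how often it occurs in the reference.
        overlap = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # Without smoothing, any zero precision makes BLEU zero.
        log_precision_sum += math.log(overlap / total) / max_n
    # Brevity penalty: candidates shorter than the reference are penalized.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(log_precision_sum)


if __name__ == "__main__":
    print(simple_bleu("the cat sat on the mat", "the cat sat on the mat"))  # 1.0
    print(simple_bleu("the cat sat on a mat", "the cat sat on the mat"))    # about 0.537
```

In the second example, the clipped precisions are 5/6, 3/5, 2/4, and 1/3, and both sentences have the same length, so the score is the fourth root of their product, (1/12)^(1/4).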

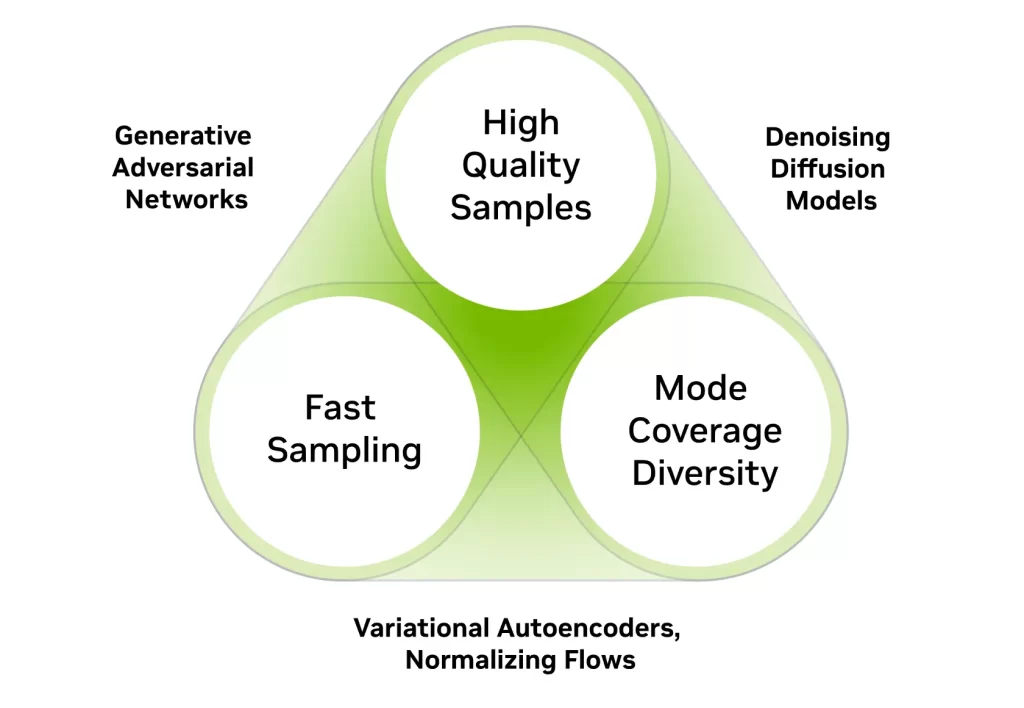
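The two image metrics, IS and FID, reduce to short formulas once a pre-trained classifier has done the heavy lifting: IS = exp(E_x[KL(p(y|x) || p(y))]), and FID = ||μ_r − μ_g||² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^(1/2)). The sketch below assumes the classifier’s per-image probabilities (`probs`) and the feature vectors (`real_feats`, `gen_feats`) are already available as plain Python lists. To stay dependency-free, it computes FID with diagonal covariances only, which is a simplification of the full matrix formula rather than what standard FID tools do.

```python
import math


def inception_score(probs):
    """IS = exp(mean over images of KL(p(y|x) || p(y))).

    `probs` holds one class-probability vector per generated image, assumed
    to come from a pre-trained classifier such as Inception v3.
    """
    num_classes = len(probs[0])
    # Marginal label distribution p(y), averaged over all generated images.
    marginal = [sum(p[k] for p in probs) / len(probs) for k in range(num_classes)]
    total_kl = 0.0
    for p in probs:
        total_kl += sum(pk * math.log(pk / mk) for pk, mk in zip(p, marginal) if pk > 0)
    return math.exp(total_kl / len(probs))


def fid_diagonal(real_feats, gen_feats):
    """FID assuming diagonal covariances (a simplification):
    sum over dimensions of (mu_r - mu_g)^2 + (sigma_r - sigma_g)^2.
    """
    def stats(feats):
        n, dims = len(feats), len(feats[0])
        means = [sum(f[d] for f in feats) / n for d in range(dims)]
        stds = [math.sqrt(sum((f[d] - means[d]) ** 2 for f in feats) / n)
                for d in range(dims)]
        return means, stds

    mu_r, sd_r = stats(real_feats)
    mu_g, sd_g = stats(gen_feats)
    return sum((a - b) ** 2 + (s - t) ** 2
               for a, b, s, t in zip(mu_r, mu_g, sd_r, sd_g))


if __name__ == "__main__":
    print(inception_score([[1.0, 0.0], [0.0, 1.0]]))  # about 2.0: confident and diverse
    print(inception_score([[0.5, 0.5], [0.5, 0.5]]))  # 1.0: neither confident nor diverse
    print(fid_diagonal([[0, 0], [2, 2]], [[1, 1], [3, 3]]))  # 2.0: means shifted by 1 per dimension
```

IS ranges from 1 up to the number of classes, so higher is better, while an FID of 0 means the two feature distributions match exactly under the diagonal assumption.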

Evaluation typically checks a generative AI model against three major requirements that determine whether it is successful:

- Quality: The generated outputs should be realistic and relevant to the input data and task. For example, in speech generation, poor-quality speech is difficult to understand, and in image generation, the output should be visually indistinguishable from natural images.

- Diversity: The generative model must capture the complexity and range of the data distribution without compromising quality. This reduces unwanted bias in learned models and makes outputs more creative. For instance, in text generation, the model should produce highly diverse sentences that express different meanings and styles. Text with higher perplexity and burstiness (less predictable word choices and more varied sentence structure) is also less likely to be flagged by AI detectors.

- Speed: The model should produce outputs quickly and efficiently, especially for interactive applications such as chatbots like ChatGPT or Bard that require real-time responses. In an image editor like Picsart, for example, the model should be able to modify or enhance an image in seconds.

These criteria are hard to meet simultaneously because they often trade off against one another. For example, improving the quality and diversity of outputs may require more training data or computational resources, which can reduce the model’s speed or scalability.

Applications of Generative AI

Generative AI has become one of the most strategic technologies, with applications in fields such as art and music, design, pharmaceuticals, gaming, and marketing. Here’s how it’s used:

- ChatGPT: An AI chatbot developed by OpenAI that lets users steer and refine a conversation toward a desired length, format, style, level of detail, and language.

- Midjourney: A program that generates images from text descriptions called prompts, similar to DALL-E and Stable Diffusion.

- Synthesia: An AI video maker that lets users create videos from text prompts using AI avatars and voiceovers in over 120 languages. It is less time-consuming than traditional video production and can save up to 80% of the cost.

- Amper Music: An AI composer, performer, and musician that has helped create several AI songs. Taryn Southern’s album “I AM AI” was composed using this technology.

- Stitch Fix: An online personal styling service with AI and deep learning at its core. The website selects clothing items for customers based on their style preferences.

- NVIDIA’s Omniverse: A platform that helps artists create 3D models more efficiently.

- Insilico Medicine: The company uses generative AI techniques for drug discovery and used them to design new DDR1 kinase inhibitors in just 46 days.

Is Generative AI the Future?

Generative AI can’t be assessed or predicted as a single monolithic entity; it is better seen as a broad, dynamic landscape of models and applications capable of augmenting human intelligence. Its future depends on how we develop, deploy, and govern these models to balance risk and opportunity.

Yes, generative AI is likely to be the future. Rather than remaining a generalist tool for chatbots and information retrieval, it is likely to become a set of specialized models applied across different domains. One market forecast projects growth from USD 9.8 billion in 2022 to USD 56.5 billion by 2028.

Generative AI and Web3 (a term for a decentralized, distributed web powered by blockchain and other technologies) may even go together like peanut butter and jelly, except that the bread is code, infrastructure, and asset portability.

Looking ahead, niche generative AI is more likely to come to the fore because it can draw on domain-specific data and knowledge to generate more realistic and relevant outputs.

Niche generative AI covers tools designed for a specific domain that integrate with existing workflows, such as GitHub Copilot for code and Stable Diffusion for images. Companies are increasingly opting for niche generative AI: more than 250 are built entirely around the idea, and more will emerge as part of the AI hype cycle.

Generative AI Models

Generative AI models blend different AI algorithms to interpret and create content. In text generation, they use natural language processing methods to turn basic elements like letters and words into structured formats like sentences and parts of speech.

These are then converted into vector representations using several encoding strategies. Similarly, images are processed into distinct visual components, also represented as vectors.
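As a toy illustration of turning words into vectors, the sketch below builds a vocabulary from a tiny corpus, maps each word to an integer ID, and encodes a sentence as one-hot vectors. Real models use learned subword tokenizers and dense embeddings instead; the whitespace tokenization, the corpus, and the function names here are hypothetical simplifications.

```python
def build_vocab(corpus):
    """Assign an integer ID to each distinct lowercase word, in order of first appearance."""
    vocab = {}
    for sentence in corpus:
        for word in sentence.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab


def encode(sentence, vocab):
    """Map each word to its ID (unknown words are simply skipped in this sketch)."""
    return [vocab[w] for w in sentence.lower().split() if w in vocab]


def one_hot(ids, vocab_size):
    """Turn a list of IDs into one-hot vectors of length vocab_size."""
    return [[1 if i == token_id else 0 for i in range(vocab_size)] for token_id in ids]


corpus = ["The cat sat", "The dog ran"]
vocab = build_vocab(corpus)
ids = encode("the dog sat", vocab)
print(vocab)  # {'the': 0, 'cat': 1, 'sat': 2, 'dog': 3, 'ran': 4}
print(ids)    # [0, 3, 2]
print(one_hot(ids, len(vocab)))  # [[1, 0, 0, 0, 0], [0, 0, 0, 1, 0], [0, 0, 1, 0, 0]]
```

One-hot vectors grow with the vocabulary and treat every word as equally unrelated, which is why practical systems learn dense embeddings where similar words end up with similar vectors.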

It’s important to note, however, that these methods can inadvertently incorporate biases or misleading elements present in the training data.

Once a suitable representation method is chosen, developers utilize specific types of neural networks to produce new content based on prompts.

Techniques such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), the latter of which pair an encoder with a decoder, are particularly effective for crafting realistic human likenesses, generating synthetic data for AI training, or creating accurate replicas of specific individuals.

Advances in transformers, such as Google’s BERT, OpenAI’s GPT series, and DeepMind’s AlphaFold, have further enhanced these neural networks’ capabilities.

They now not only encode complex information like language, imagery, and biological structures but also generate novel content based on this encoded data.

These advancements have significantly expanded the potential applications of generative AI, making it a versatile tool in fields ranging from linguistics to bioinformatics.
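Transformers build these encodings with attention, which lets each position in a sequence weigh every other position when computing its representation. Below is a minimal sketch of scaled dot-product attention, softmax(QKᵀ / √d)V, on plain Python lists. It omits the learned projection matrices, multiple heads, and masking that real transformers use, and the toy vectors are made up for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one query at a time."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Three toy token vectors used as queries, keys, and values (self-attention).
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out, weights = attention(x, x, x)
    for row in weights:
        print([round(w, 2) for w in row])
```

Each row of weights sums to 1, so every output vector is a blend of the inputs, weighted toward the tokens most similar to the query. Stacking many such layers is what lets transformers capture long-range relationships in text, images, or protein sequences.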

Generative AI vs. AI

In general, the primary distinction between generative AI and conventional AI lies in their capabilities and goals. Here’s a breakdown of the key differences:

1. Creation of New Content: This is what makes generative AI distinct: it generates new things. It learns to identify the structures hidden in large amounts of existing data and then produces new, original content based on those learned patterns.

2. Umbrella Category: Artificial intelligence broadly covers any system designed to imitate human reasoning. This broad category includes generative AI, which focuses on machines capable of generating new content.

3. Data Analysis and Task Performance: Traditional AI concentrates on analyzing data to perform particular tasks, such as generating insights or making predictions about the future. These tasks typically depend on explicit programming.

4. Role of Prompts in Content Generation: Generative AI typically starts from a prompt, in which people provide a query along with any data needed to shape the output. This makes it a more playful and imaginative form of interaction than traditional AI, which is more structured and workflow-based.

5. Underlying Principles and Functionalities: While traditional AI relies on explicit programming to execute task-specific operations, generative AI is based on machine learning techniques. This difference in principles and function is what makes generative AI flexible and creative, whereas traditional AI follows more rigid rules.

In short, traditional AI focuses mainly on analyzing existing datasets to make predefined decisions, whereas generative AI goes further by producing new data of its own, which is where its creativity lies.
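The core idea of point 1, learning patterns from existing data and then producing new content from them, can be illustrated with a deliberately tiny generative model: a word-level bigram model that records which word follows which in a training text and then samples new sequences from those observations, starting from a prompt word. This is a toy stand-in chosen for illustration (modern generative AI uses neural networks rather than raw counts), and the corpus and function names are hypothetical.

```python
import random
from collections import defaultdict


def train_bigram(text):
    """Record, for each word, every word observed to follow it."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, prompt, max_words=8, seed=None):
    """Sample a new word sequence, one word at a time, starting from `prompt`."""
    rng = random.Random(seed)
    output = [prompt.lower()]
    for _ in range(max_words - 1):
        options = followers.get(output[-1])
        if not options:  # No observed continuation: stop generating.
            break
        # Duplicates in the list make frequent continuations more likely.
        output.append(rng.choice(options))
    return " ".join(output)


corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram(corpus)
print(generate(model, "the", seed=1))
```

Different seeds can produce sentences that never appeared in the training text, such as a dog sitting on the mat, even though every adjacent word pair did. That is the generative pattern in miniature: learn the structure of the data, then sample something new from it.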