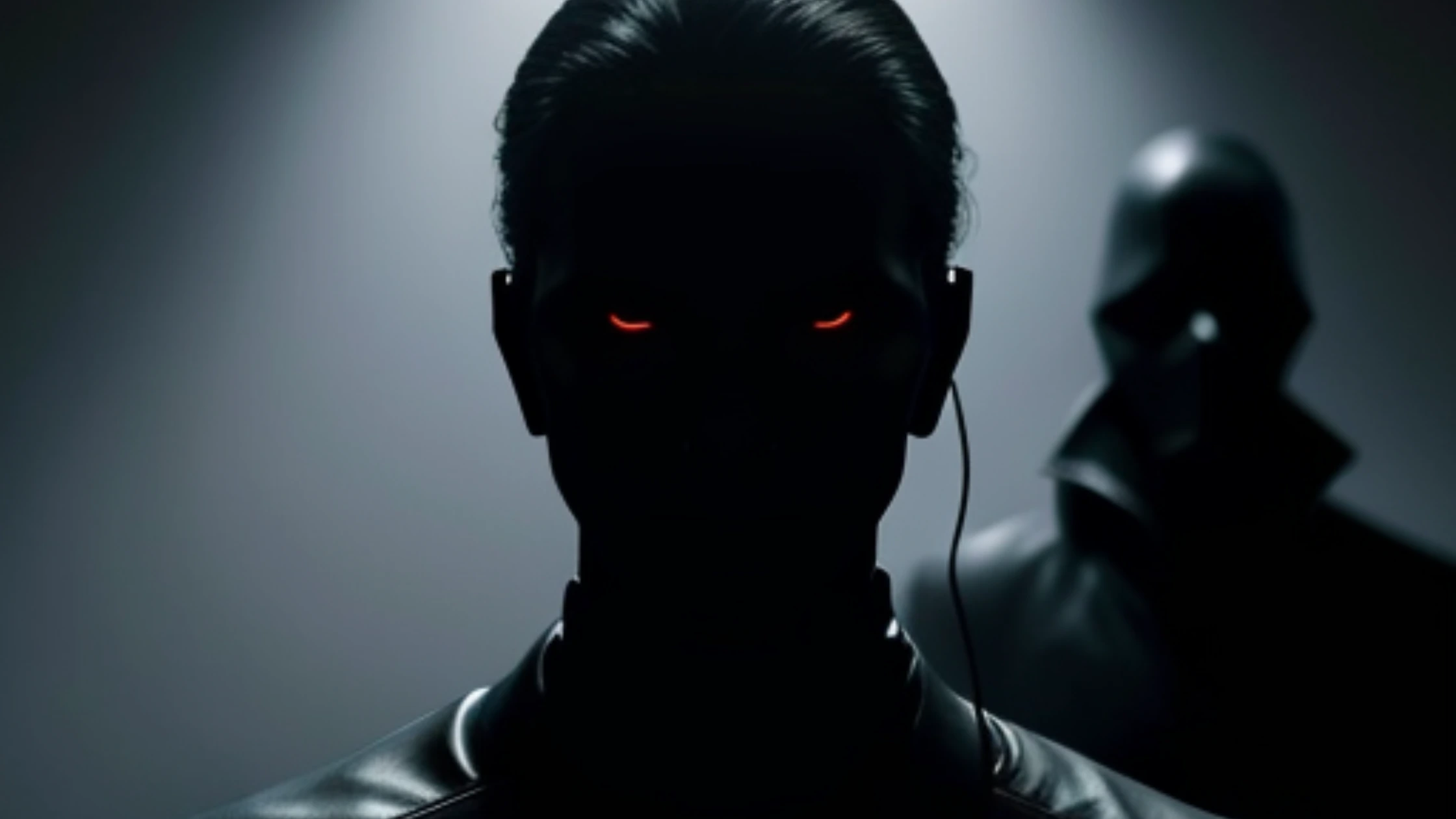

AI Voice Cloning Scams Are On the Rise! Here’s How To Save Yourself

AI voice cloning scams occur when scammers record a person’s voice or find an audio clip on social media, then pass it through AI software like ElevenLabs or Descript to generate a realistic voice clone.

The cloned voice may then be used to place panicked calls to family or friends, convincing the unwitting recipient to hand over money or access to sensitive information.

In 2019, a CEO was scammed out of $243,000 after a scammer used an AI-generated clone of the voice of the CEO’s boss to request a funds transfer.

Similarly, an Arizona mother received an AI-generated ransom call claiming that her daughter, who was away on a skiing trip, had been kidnapped.

The callers used AI voice cloning to mimic her daughter’s voice, making the call sound convincing.

According to a recent report by McAfee, 83% of Indian victims of voice cloning scams lost money, and 69% of Indians are unable to distinguish between an AI voice and a human voice.

Voice cloning scams have also scaled up into fake call centers that target victims around the world.

AI voice cloning is a technology that uses machine learning to simulate a specific person’s voice, creating a near-identical copy that mimics everything from syllable pronunciation to intonation patterns. It should not be confused with generic speech synthesis, which relies on predefined voices rather than a copy of a particular speaker.

AI voice clones are used to impersonate celebrities; generate podcasts, audiobooks, and voiceovers; create personalized messages and social media posts; and make video games, movies, and animations more immersive.

The Threat of AI Scams

AI voice cloning puts the security and privacy of individuals at serious risk, and it can enable serious financial fraud.

Many people have been victims of identity theft and emotional manipulation, with scammers using fake voices to deceive, coerce, or blackmail them.

How does that happen? Scammers can put voice cloning to malicious use in several ways:

- Impersonate a trusted or close person, such as a family member, colleague, or friend, to extract personal information, money, or access to confidential accounts.

- Pretend to be a bank, organization, or government agency to demand payment, verification, or confirmation of sensitive details.

- Mimic the voice of an influencer, a celebrity, or a public figure to spread rumors and false information that endorse the scammer’s product or harm the person’s reputation.

- Generate fake recordings or audio evidence to cause damage to someone’s reputation, credibility, or legal rights.

Types of AI Voice Cloning Scams

Grandparent Scam

In this case, the scammer calls the victim pretending to be a grandchild or other close relative and demands money immediately because of an alleged crisis. The scammer may claim to have been in an accident, arrested, or in some other serious trouble, and often asks the victim not to tell the parents.

Sometimes, the scammer hands the phone over to someone posing as a lawyer who seeks immediate payment, and uses a spoofed caller ID to make the call seem legitimate.

Unfortunately, bad actors can now use artificial intelligence technology “to mimic voices, convincing people, often the elderly, that their loved ones are in distress”.

Grandparent scams and related cons are common, with more than 91,000 complaints logged by the FTC from 2015 through the first quarter of 2020.

Tech Support Scam

This is one of the most common scams, often originating from call centers in India. The callers pose as tech support specialists, use AI voices, and claim that the victim has a problem with their computer.

To appear legitimate, they use a fake caller ID and ask the victim for remote access to the computer. They then run a diagnostic test and report that one of the files shows a problem, even though there is none.

Then they offer fake “solutions” and ask for payment as a one-time fee or a subscription to a purported support service.

Phishing Scam

Phishing is an old-school scamming method in which a scammer sends an AI-written email or text message that appears to come from a trusted company and contains a link.

When clicked, the link takes the victim to a fake site that looks authentic. The site then asks the victim to enter personal information such as passwords, account numbers, or Social Security numbers, which the scammer uses to break into the victim’s accounts.

Phishing emails and text messages tell a story to trick the victim into clicking on a link or opening an attachment. The message might appear to come from an online payment website or app, and the scammer might say they’ve noticed suspicious activity or log-in attempts, even if they haven’t.

Romance Scam

Romance scams are similar to ‘catfishing’, where a scammer creates a fake profile and pretends to be someone they’re not.

They build a relationship, groom and manipulate the victim over time to gain their trust, and then request money, ultimately to either steal the victim’s identity or rob them of their wealth.

Since the advent of dating apps like Tinder and Grindr, many people have fallen victim to these scams.

The Netflix documentary “The Tinder Swindler” tells the real-life story of a scammer who catfished multiple women to take their money.

How to Protect Yourself from AI Voice Cloning Scams?

A common fraud to be aware of is the use of AI voice cloning to convince you that the caller is someone you know or trust. The caller may then urge you to send money, buy gift cards, or wire funds.

If in doubt, always call the actual relative whose voice may have been cloned, or ask your family members to verify the caller.

Second, agree on a code word with anyone who might need your urgent help in the future, and keep it known only to the two of you.

In an emergency, ask the caller to say the code word. If they can’t, hang up and report the call.

Also, scammers depend on information to get the better of you, so don’t hand over sensitive information right away, even if the caller sounds like your boss, bank, or service provider.

If you’re not sure if the caller is who they say they are, try to catch them off guard by asking them questions that are hard to answer with a generic script.

For example, if they claim to be your friend, ask them about something specific that happened recently or something only you two would know. If they hesitate, repeat themselves, or give vague answers, they might be using AI voice cloning to fool you.

Conclusion

As AI continues to advance, the threat of AI voice cloning will only grow, and it must be taken seriously.

Everyone should be aware of the dangers of these increasingly common calls. If you think you have been the victim of a voice cloning scam, report it to your local cybercrime authority immediately.