Catastrophic Forgetting: Can AI Learn Without Forgetting?

Can you remember what you learned yesterday? Or the day before? What about a month ago, or the lesson on photosynthesis back in class 5? Probably not, because our brains can retain only so much information and let the rest go.

Neural networks are a lot like your brain: they learn new things, but they can also forget what they learned before.

This recurring problem in AI systems is called catastrophic forgetting, and it has posed a challenge for tech firms built around niche generative artificial intelligence.

Catastrophic forgetting, also called catastrophic interference, is an obstacle in machine learning in which an AI system loses information from previous tasks as it moves on to the next one. In a self-driving car, for example, a system that forgets basic safety rules could cause harm.

In Texas, US, two men, one in the front passenger seat and one in the back seat, were killed after a Tesla Model S crashed into a tree and caught fire.

Why Does Catastrophic Forgetting Occur?

Interference: When the network learns new information, it updates its weights and biases, which can overwrite or interfere with the information previously stored in the network.

When this happens, the network loses its ability to recall previous information and to generalize from it.
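To make interference concrete, here is a minimal sketch, assuming a toy model with a single shared weight `w` (prediction `w * x`) and two made-up tasks, task A (`y = 2x`) and task B (`y = -3x`). The helper names and data are hypothetical illustrations, not taken from any real system. After plain gradient descent on task B, the weight that solved task A has been overwritten.

```python
# Toy demonstration of catastrophic forgetting with a single shared weight.
# Assumption: task A is "y = 2x" and task B is "y = -3x"; both are illustrative.

def train(w, data, lr=0.1, epochs=200):
    """Plain gradient descent on mean squared error for the model y = w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def mse(w, data):
    """Mean squared error of the model y = w * x on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

task_a = [(x, 2 * x) for x in (-1.0, 0.5, 1.0)]
task_b = [(x, -3 * x) for x in (-1.0, 0.5, 1.0)]

w = train(0.0, task_a)
loss_a_before = mse(w, task_a)   # close to 0: task A has been learned
w = train(w, task_b)             # now learn task B, reusing the same weight
loss_a_after = mse(w, task_a)    # large: task A has been overwritten

print(f"Task A loss before: {loss_a_before:.4f}, after learning B: {loss_a_after:.4f}")
```

Because both tasks must share the one weight, no single value serves both, so learning B necessarily erases A.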

Plasticity-stability trade-off: When the network learns new information, it has to balance being plastic (adaptable) against being stable (robust).

If the network is too plastic, it may overwrite old information; if it is too stable, it may struggle to learn anything new. Striking the right balance is difficult.
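A minimal sketch of this trade-off, assuming for illustration that each task's loss is a simple quadratic bowl, `(w - a)^2` for task A and `(w - b)^2` for task B, and that stability comes from an added penalty `lam * (w - a)^2` that pulls the weight back toward the old solution. The helper `learn_task_b` and the values of `a`, `b`, and `lam` are hypothetical. Setting the derivative of `(w - b)^2 + lam * (w - a)^2` to zero gives the closed form `w = (b + lam * a) / (1 + lam)`.

```python
# Sketch of the plasticity-stability trade-off on a one-parameter model.
# Assumptions (not from the article): each task's loss is a quadratic bowl,
# L_A(w) = (w - a)^2 and L_B(w) = (w - b)^2, and stability is enforced by a
# penalty lam * (w - a)^2 that pulls w back toward the old task-A solution.

def learn_task_b(a: float, b: float, lam: float) -> float:
    """Minimize (w - b)^2 + lam * (w - a)^2; the optimum is (b + lam * a) / (1 + lam)."""
    return (b + lam * a) / (1 + lam)

a, b = 2.0, -3.0  # hypothetical optimal weights for task A and task B
for lam in (0.0, 1.0, 10.0):
    w = learn_task_b(a, b, lam)
    loss_a, loss_b = (w - a) ** 2, (w - b) ** 2
    print(f"lam={lam:5.1f}  w={w:6.3f}  loss_A={loss_a:7.3f}  loss_B={loss_b:7.3f}")
```

With `lam = 0` the learner is fully plastic and forgets task A; as `lam` grows it becomes more stable but learns task B less well. Regularization methods such as elastic weight consolidation add a weighted penalty of this general kind; the unweighted version here is a simplification.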

Representational overlap: When the network processes new information, it may reuse neurons or features that encode previous information, which can cause conflict or confusion between tasks. This can reduce the network's ability to discriminate between tasks.
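The role of overlap can be sketched with a two-weight linear model `y = w1*x1 + w2*x2`. This is an illustration under assumed, made-up tasks, each given as a single (input, target) pair; `train`, `loss`, and `forgetting` are hypothetical helpers. When the two tasks use separate input features, learning task B leaves task A intact; when they share a feature, learning B damages A.

```python
# Sketch: how overlapping input features cause interference between tasks.
# Assumptions: a two-weight linear model y = w1*x1 + w2*x2 and made-up tasks,
# each given as a single (input, target) pair.

def train(w, data, lr=0.1, epochs=200):
    """Gradient descent on squared error for a linear model with weights w."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            # Only weights attached to active (non-zero) inputs receive an update.
            w = [wi - lr * 2 * err * xi for wi, xi in zip(w, x)]
    return w

def loss(w, data):
    return sum((sum(wi * xi for wi, xi in zip(w, x)) - y) ** 2 for x, y in data)

def forgetting(task_a, task_b):
    """Train on task A, then on task B; return task A's loss afterwards."""
    w = train([0.0, 0.0], task_a)
    w = train(w, task_b)
    return loss(w, task_a)

# Disjoint features: task A uses only x1, task B uses only x2.
disjoint = forgetting([((1.0, 0.0), 2.0)], [((0.0, 1.0), 3.0)])
# Overlapping features: both tasks rely on x1.
overlap = forgetting([((1.0, 1.0), 2.0)], [((1.0, 0.0), 3.0)])
print(f"Task A loss after learning B: disjoint={disjoint:.3f}, overlapping={overlap:.3f}")
```

In the overlapping case, the shared weight `w1` is pushed to fit task B, which changes task A's prediction even though task A's data never appears during that training.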

There May Be a Solution…

Sleep. Yes, sleep may help solve the problem of catastrophic forgetting. But the answer isn't a simple yes or no.

Researchers have suggested that sleep is important for memory and learning in humans because it helps integrate new experiences into long-term memory.

“Reorganization of memory might be actually one of the major reasons why organisms need to go through the sleep stage,” says study co-author Erik Delanois, a computational neuroscientist at the University of California, San Diego.

Previous studies have tried to solve this problem by simulating sleep. For instance, when neural networks learned a new task, they were also fed data from previously learned tasks to help them retain their past knowledge. This was thought to resemble how the brain works during sleep, when old memories are replayed.
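The replay idea (often called rehearsal) can be sketched with a toy model. This is a minimal illustration under stated assumptions: a two-weight linear model and two hypothetical tasks that share an input feature, with task A's single example stored and mixed into task B's training. Real systems replay many stored or generated samples.

```python
# Sketch of rehearsal ("replay"): while learning task B, keep mixing in stored
# examples from task A. Assumptions: a toy two-weight linear model and
# made-up tasks; the names here are illustrative only.

def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def train(w, data, lr=0.1, epochs=500):
    """Per-example gradient descent on squared error, cycling through `data`."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y
            w = [wi - lr * 2 * err * xi for wi, xi in zip(w, x)]
    return w

def loss(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data)

task_a = [((1.0, 1.0), 2.0)]   # hypothetical old task
task_b = [((1.0, 0.0), 3.0)]   # hypothetical new task; shares input x1 with task A

w_a = train([0.0, 0.0], task_a)          # learn task A first
w_naive = train(w_a, task_b)             # learn B alone: A is forgotten
w_replay = train(w_a, task_b + task_a)   # learn B while replaying stored A data

print(f"No replay:   loss_A={loss(w_naive, task_a):.4f}, loss_B={loss(w_naive, task_b):.4f}")
print(f"With replay: loss_A={loss(w_replay, task_a):.4f}, loss_B={loss(w_replay, task_b):.4f}")
```

Replay only works here because `task_a` was kept in memory; the approach depends on storing old data.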

However, this method assumed that neural networks need all the data used to learn old tasks whenever they learn something new. The process was not only time-consuming and data-intensive but also unlike real brain activity during sleep: brains do not keep or replay all the data from old tasks.

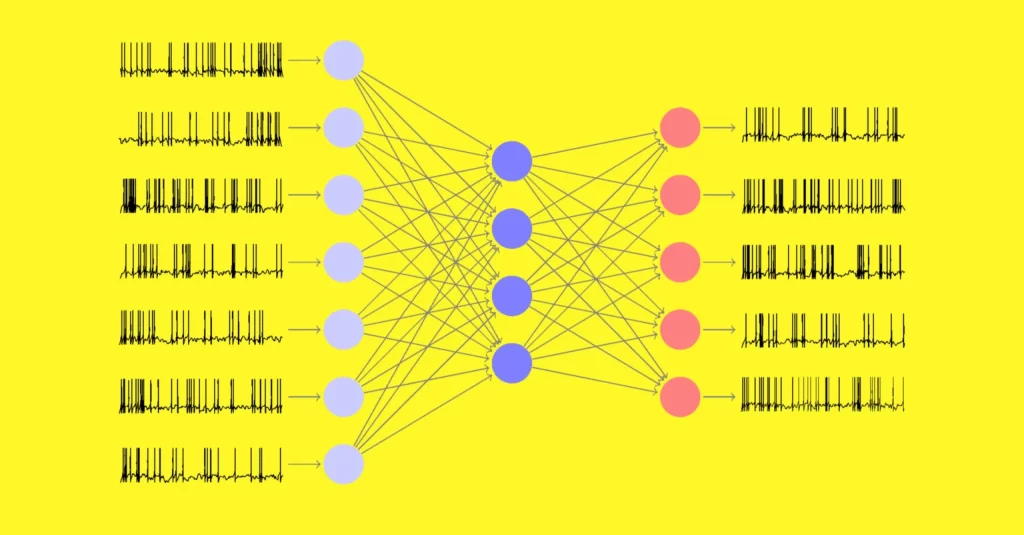

Researchers investigated the causes of catastrophic forgetting and the role of sleep in preventing it, using a different kind of neural network called a “spiking neural network”, which more closely resembles the human brain.

In artificial neural networks, units called neurons receive data and work together to solve a problem, such as recognizing faces.

The network adjusts the synapses (the connections between neurons) and checks whether the resulting behavior is better at solving the problem. Over time, the network learns which behaviors solve problems and adopts them as its default, loosely copying how the human brain learns.
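As a small illustration of adjusting connections until the behavior improves, the sketch below uses the classic perceptron learning rule on a single neuron. This is an assumption chosen for simplicity, not the method used in the study: the task (logical OR), the learning rate, and the helper names are all illustrative.

```python
# Sketch of a single artificial neuron learning by adjusting its "synapses"
# (weights). Assumptions: the classic perceptron learning rule on the logical
# OR function, chosen only because it is small and easy to check.

def step(z: float) -> int:
    return 1 if z > 0 else 0

def train_perceptron(samples, lr=0.1, epochs=20):
    w = [0.0, 0.0]  # one weight per input connection
    b = 0.0         # bias: acts like an adjustable (negative) firing threshold
    for _ in range(epochs):
        for (x1, x2), target in samples:
            out = step(w[0] * x1 + w[1] * x2 + b)
            error = target - out  # 0 if correct, +1 or -1 if wrong
            # Strengthen or weaken each connection in proportion to its input.
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b

or_samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_perceptron(or_samples)
predictions = [step(w[0] * x1 + w[1] * x2 + b) for (x1, x2), _ in or_samples]
print(predictions)
```

The weights only change when the neuron's answer is wrong, so once its behavior solves the task, that behavior becomes its default.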

Simplifying the Solution: How to End Catastrophic Forgetting?

In most artificial neural networks, a neuron's output is a number that changes continuously with the input it receives, similar to the way biological neurons send signals over time.

Spiking neural networks work more like biological neurons: a neuron spikes, or produces an output signal, only when it receives enough input signals within a specified time frame.

Think of it as a light that turns on only if enough people are present in a room at the same time.
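A common simplified model of this behavior is the leaky integrate-and-fire (LIF) neuron. The sketch below assumes discrete time steps, a leak (decay) factor of 0.9 per step, and a firing threshold of 1.0; these numbers are illustrative, not values from the study. The same total input produces a spike only when it arrives close together in time, much like the light that needs enough people in the room at once.

```python
# Sketch of a leaky integrate-and-fire (LIF) neuron, a common simplified model
# of a spiking neuron. Assumptions: discrete time steps, a decay factor of 0.9
# per step, and a firing threshold of 1.0; all values are illustrative.

def lif_spikes(inputs, threshold=1.0, decay=0.9):
    """Return a list of 0/1 spikes, one per time step."""
    v = 0.0          # membrane potential
    spikes = []
    for current in inputs:
        v = v * decay + current   # old input leaks away, new input adds up
        if v >= threshold:
            spikes.append(1)      # enough input arrived close together: fire
            v = 0.0               # reset after the spike
        else:
            spikes.append(0)
    return spikes

# The same total input (1.2), delivered spread out versus bunched together.
spread_out = lif_spikes([0.4, 0.0, 0.0, 0.4, 0.0, 0.0, 0.4])
bunched = lif_spikes([0.4, 0.4, 0.4, 0.0, 0.0, 0.0, 0.0])
print(spread_out, bunched)
```

When the inputs are spread out, the leak drains the potential before it reaches the threshold, so the neuron stays silent.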

Because spiking neurons fire only occasionally, spiking neural networks transmit less data than typical neural networks and need less energy and communication bandwidth to function.
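A rough way to see the communication saving is to count messages. This sketch assumes four hypothetical leaky integrate-and-fire neurons with constant, made-up input currents over 20 time steps, and compares them with a conventional layer that sends one value per neuron per step. It only illustrates the counting argument; real energy savings depend on the hardware.

```python
# Rough sketch of why spiking networks can communicate less: a conventional
# layer sends a number from every neuron at every step, while a spiking layer
# only sends an event when a neuron fires. Assumptions: 4 hypothetical LIF
# neurons with constant, made-up input currents, simulated for 20 steps.

def count_spikes(current, steps=20, threshold=1.0, decay=0.9):
    """Count how often a leaky integrate-and-fire neuron fires under constant input."""
    v, spikes = 0.0, 0
    for _ in range(steps):
        v = v * decay + current
        if v >= threshold:
            spikes += 1
            v = 0.0
    return spikes

currents = [0.05, 0.1, 0.3, 0.6]        # weak to strong input per neuron
steps = 20
dense_messages = len(currents) * steps  # one value per neuron per step
spike_messages = sum(count_spikes(c, steps) for c in currents)
print(f"dense: {dense_messages} values, spiking: {spike_messages} events")
```

Weakly driven neurons never fire at all here, so they send nothing, while a conventional layer would still transmit a value for them at every step.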

Beyond their efficiency, spiking neural networks are useful here because their behavior lets researchers model how memories are represented and change in an abstract synaptic space, as happens during sleep.

“The nice thing is that we do not explicitly store data related to earlier memories to artificially replay them during sleep to avoid forgetting,” says Pavel Sanda, a computational neuroscientist at the Institute of Computer Science of the Czech Academy of Sciences and another author of the study.
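The general idea of a sleep phase that uses no stored data can be illustrated with a toy. This is not the study's model: it assumes one output neuron with four input synapses, hypothetical "learned" weights, spontaneous activity approximated by sweeping every on/off input pattern in turn, and a simple Hebbian-style rule in which synapses active during an output spike are strengthened and silent ones are weakened. All parameter values are illustrative.

```python
# Toy illustration of a sleep-like phase that uses no stored training data.
# Assumptions (not the study's model): one output neuron with four input
# synapses, hypothetical "learned" weights, spontaneous activity approximated
# by sweeping every on/off input pattern, and a simple Hebbian-style rule.
from itertools import product

def sleep_phase(weights, cycles=50, threshold=0.5, inc=0.01, dec=0.015):
    w = list(weights)
    for _ in range(cycles):
        for pattern in product((0, 1), repeat=len(w)):  # spontaneous input spikes
            fired = sum(wi * xi for wi, xi in zip(w, pattern)) >= threshold
            if not fired:
                continue  # no output spike, so no plasticity on this step
            for i, active in enumerate(pattern):
                if active:   # input was active when the output spiked: strengthen
                    w[i] = min(1.0, w[i] + inc)
                else:        # output spiked while this input was silent: weaken
                    w[i] = max(0.0, w[i] - dec)
    return w

learned = [0.8, 0.8, 0.1, 0.1]  # hypothetical weights after training on a task
after_sleep = sleep_phase(learned)
print([round(x, 3) for x in after_sleep])
```

Connections that were already strong end up near their maximum and weak ones shrink toward zero, so the existing synaptic pattern is reinforced without replaying any stored examples. The toy does not model two tasks; it only shows how spontaneous activity plus local plasticity can act on what is already stored in the weights.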

The researchers found that their strategy helped prevent catastrophic forgetting: after going through sleep-like periods, the spiking neural network was able to perform both the old task and the new one.

They noted that this strategy helped preserve the patterns of synapses associated with both the old and the new tasks.